At Inductiv AI Labs, we’re not just building cutting-edge AI. We’re making a deliberate choice about how it should be built.

This manifesto outlines the core principles guiding our work: a commitment to privacy, a belief in transparency, and a strategy rooted in trust. We see these not as constraints, but as competitive advantages in a rapidly shifting AI landscape.

The Trust Deficit in AI

It’s mid-2025, and AI agents are ubiquitous. These systems now compose original music, draft legal briefs, and even accelerate drug discovery with unprecedented sophistication.

Yet beneath this technological marvel lies a fundamental challenge: the erosion of user privacy.

We’ve witnessed AI models inadvertently expose private medical records, retain sensitive prompts in training datasets, and leave users questioning whether their most confidential information remains secure.

At Inductiv, we believe this uncertainty represents more than a technical challenge—it’s an existential threat to AI’s promise as humanity’s collaborative partner.

The question isn’t whether AI can revolutionize industries. It’s whether we can build AI that organizations and individuals actually trust.

Engineering Trust from the Ground Up

Most AI companies treat privacy as a compliance checkbox: a layer of security added after the core systems are built. We take a fundamentally different path, designing privacy into our AI systems from the start rather than bolting it on later.

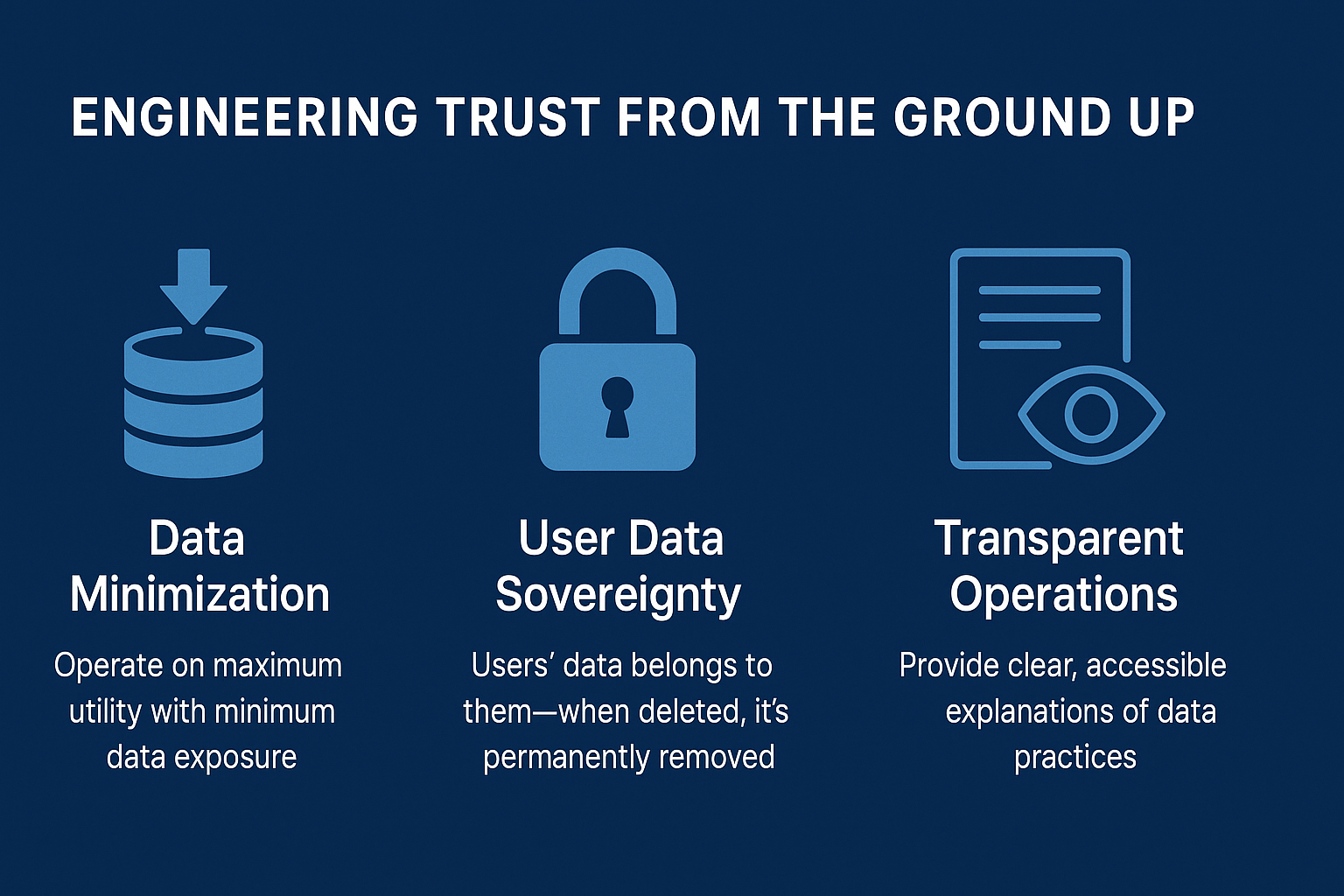

This philosophy shapes every aspect of our AI development and rests on three principles:

1. Data Minimization

We operate on a simple principle: maximum utility, minimum data. Instead of asking, “What data can we collect?”, we ask, “What’s the absolute minimum required?”

A writing assistant doesn’t need to know your location. A diagnostic AI shouldn’t access unrelated patient records. By default, we strictly limit the data each system can use.
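To make the principle concrete, here is a minimal Python sketch of deny-by-default data minimization. It assumes that each product feature declares an allowlist of the only fields it needs; the feature names, field names, and the `minimize` helper are hypothetical illustrations, not a description of Inductiv’s actual systems.

```python
# Hypothetical per-feature allowlists (illustrative only): each feature
# declares the only fields it may receive. Anything not listed is dropped.
ALLOWED_FIELDS: dict[str, frozenset[str]] = {
    "writing_assistant": frozenset({"draft_text", "tone"}),
    "diagnostic_ai": frozenset({"patient_id", "symptoms", "lab_results"}),
}


def minimize(purpose: str, record: dict) -> dict:
    """Return only the fields the given purpose is allowed to see.

    Unknown purposes receive nothing (deny by default).
    """
    allowed = ALLOWED_FIELDS.get(purpose, frozenset())
    return {key: value for key, value in record.items() if key in allowed}


if __name__ == "__main__":
    request = {"draft_text": "Dear team,", "tone": "formal", "location": "Berlin"}
    # The location field is stripped before the writing assistant sees it.
    print(minimize("writing_assistant", request))
    # -> {'draft_text': 'Dear team,', 'tone': 'formal'}
```

Because any field a feature has not explicitly declared (such as location) is discarded, the default outcome is to share less data, not more.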

2. User Data Sovereignty

We recognize that users’ data belongs to them, not to us, our investors, or third parties. When users choose to delete their information, it is not only permanently removed from our systems but also unlearned by the model itself. We treat deletion as a commitment, not a toggle.

3. Transparent Operations

Privacy shouldn’t be hidden in fine print.

We believe users deserve to understand how their data flows through our systems—how it’s stored, protected, and (when needed) deleted. Every product includes accessible explanations of our privacy architecture and security practices.

Trust-First AI: Our Strategic Advantage

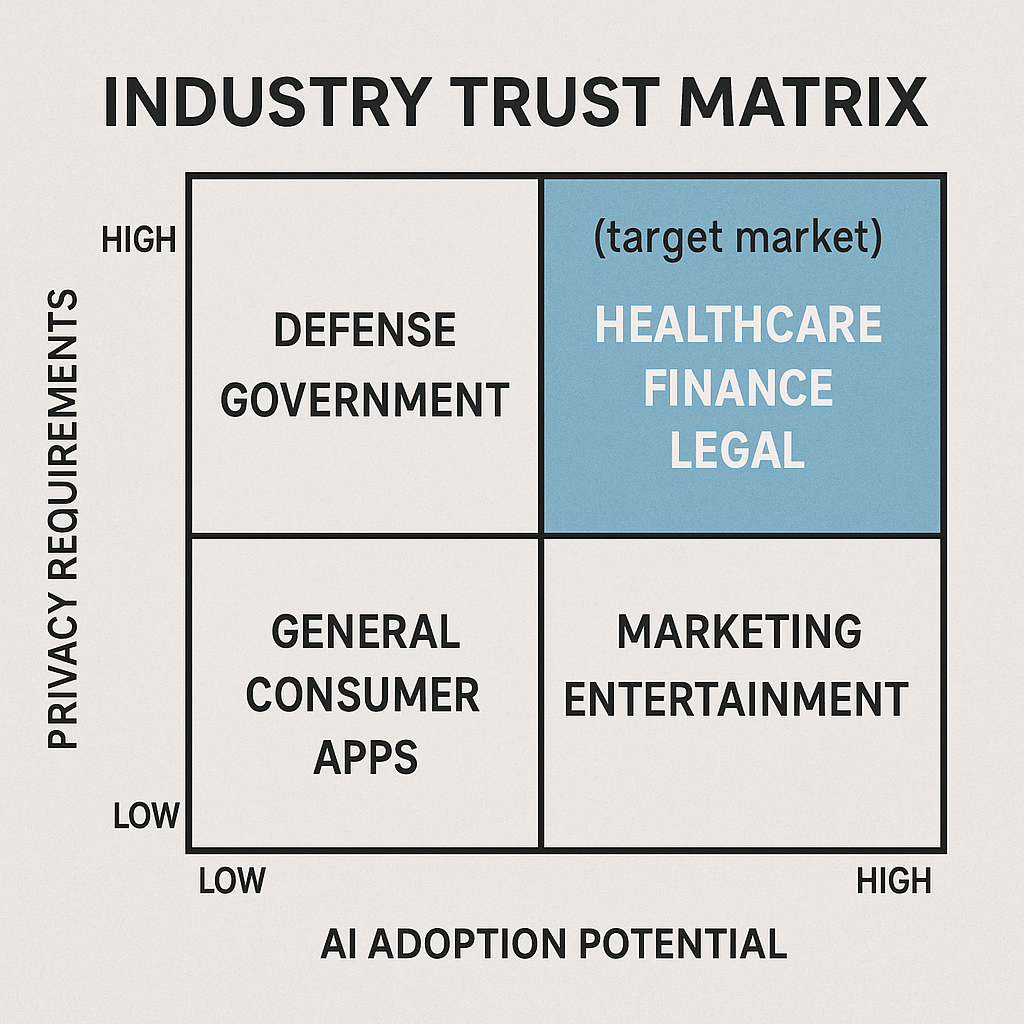

Designing AI systems around trust isn’t just the ethical path—it’s a strategic one. By embedding privacy and transparency into the core of our architecture, we’re unlocking opportunities in industries where trust isn’t optional—it’s everything.

Enabling High-Stakes Applications

Sectors like healthcare, finance, law, and public governance operate in high-trust environments where privacy failures can be catastrophic.

We’re building AI that meets the stringent standards of these industries, enabling transformational benefits that are only possible when privacy can be guaranteed.

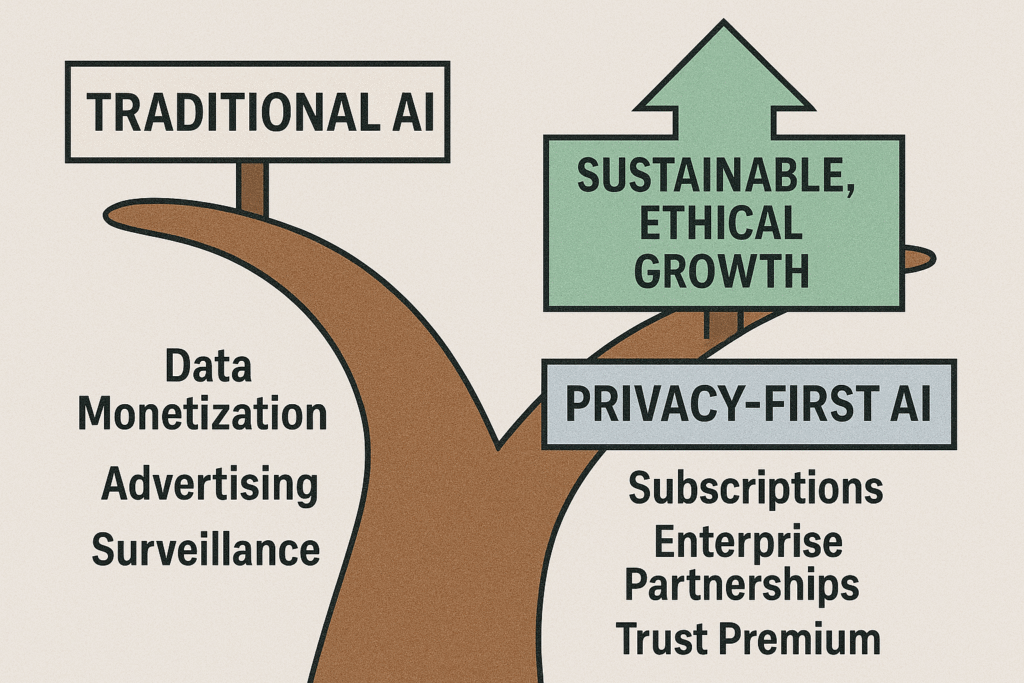

Building Sustainable Business Models

Rather than relying on data monetization or ad-driven growth, we’re building a business model aligned with our users’ interests:

- Subscription-based offerings

- Enterprise partnerships

- Custom AI deployments with transparent data governance

This ensures our incentives are always aligned with those we serve.

Privacy-first engineering demands more resources, deeper technical sophistication, and the discipline to reject shortcuts. But in a world where a single privacy breach can erase decades of brand equity and endanger people’s personal safety, “acceptable risk” is no longer acceptable.

Our Commitment

At Inductiv AI Labs, we aren’t just shipping products—we’re shaping the next era of responsible technology. Our foundational beliefs:

- Trust as the Foundation: The most transformative AI is the one that earns—and keeps—human trust.

- Privacy as an Innovation Catalyst: Privacy constraints force us to invent smarter, leaner, and more ethical systems.

- Transparency as Standard Practice: If an AI provider can’t clearly explain their architecture, they shouldn’t be trusted with sensitive data.

We’re not chasing headlines. We’re building the infrastructure for a new AI economy—one that respects users, empowers enterprises, and earns trust through action.

Because the future doesn’t belong to the most powerful systems. It belongs to the most principled ones.

Frequently Asked Questions (FAQ)

1. What is “Trust-First AI”?

Trust-First AI is an approach where privacy, transparency, and user control are engineered into the foundation of AI systems—not added later. At Inductiv AI Labs, it’s the principle that guides all our product development.

2. How does Inductiv AI protect user data?

We follow three core principles: Data Minimization (only collecting what’s absolutely necessary), User Data Sovereignty (users fully control their data), and Transparent Operations (clear explanations of how data is handled and protected).

3. Why is privacy more than just compliance?

Privacy isn’t just about meeting legal standards like GDPR or HIPAA—it’s about earning and maintaining user trust. We see privacy as a driver of innovation and long-term differentiation, not a regulatory burden.

4. How is your business model aligned with privacy?

We’ve built a privacy-aligned business model based on subscriptions and enterprise partnerships, not data monetization. Our growth depends on delivering trusted, high-performing AI—not exploiting personal information.

Saurav Dhungana is the founder of Inductiv AI Labs, a privacy-first artificial intelligence company developing trustworthy AI systems for enterprise and individual users. With over a decade of experience engineering privacy-first AI systems, Saurav advocates for transparent, accountable artificial intelligence development.